ExplainerAI™

Analytics for AI Governance in Healthcare

Manage AI Risk and Promote AI Ethics while Fostering Trust with Clinicians and Patients

Unlock Full Transparency for Predictive AI Models

ExplainerAI™ is the first-of-its-kind platform designed to transform AI in healthcare by providing complete AI transparency that fosters trust among clinicians and patients and increases AI adoption.

Structured around the four pillars of the NIH’s AI Governance Framework (trustworthiness, fairness, transparency, and accountability), ExplainerAI™ ensures compliance with AI governance standards, preventing model drift and bias through deep analytics across healthcare AI and ML portfolios. You can read more about how ExplainerAI™ works under the hood.

ExplainerAI™ integrates easily into Epic and other healthcare systems, offering full visibility into how predictive models make decisions. It allows healthcare organizations to overcome AI black box challenges and ensure that AI tools are compliant, ethical, trusted, and effective in real-world healthcare settings.

See a Comprehensive View of Model Performance

Real-Time Predictive Insights

ExplainerAI™ gives healthcare teams real-time access to predictive risk factors, model configuration parameters, and performance analytics. With an easy-to-read AI dashboard, users can monitor AI performance at both the individual patient and population level.

Model Performance vs. Actual Outcomes

Continuously measure how well the AI model performs in real-world scenarios. ExplainerAI™ helps organizations compare model predictions to actual outcomes, ensuring the predictive accuracy of AI-driven decisions and mitigating risks from AI drift.
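As a rough illustration of what comparing predictions to actual outcomes can involve, the sketch below computes a few standard metrics for a binary risk model. It is not a depiction of how ExplainerAI™ works internally; the function names, the metric selection, and the sample data are all assumptions made for this example.

```python
def confusion_counts(predicted, actual):
    """Count true/false positives and negatives for binary labels (1 = event occurred)."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p == 1 and a == 1)
    fp = sum(1 for p, a in pairs if p == 1 and a == 0)
    fn = sum(1 for p, a in pairs if p == 0 and a == 1)
    tn = sum(1 for p, a in pairs if p == 0 and a == 0)
    return tp, fp, fn, tn


def performance_summary(predicted, actual):
    """Summarize how model predictions line up with observed outcomes."""
    tp, fp, fn, tn = confusion_counts(predicted, actual)
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else None,
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
        "ppv": tp / (tp + fp) if (tp + fp) else None,
    }


# Hypothetical data: model flags vs. what actually happened to each patient.
predicted = [1, 0, 1, 1, 0, 0, 1, 0]
actual = [1, 0, 0, 1, 0, 1, 1, 0]
summary = performance_summary(predicted, actual)
print(summary)
```

Recomputing a summary like this on a schedule, as new outcomes arrive, is one simple way to see whether real-world performance is holding up.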

Granular Data Exploration

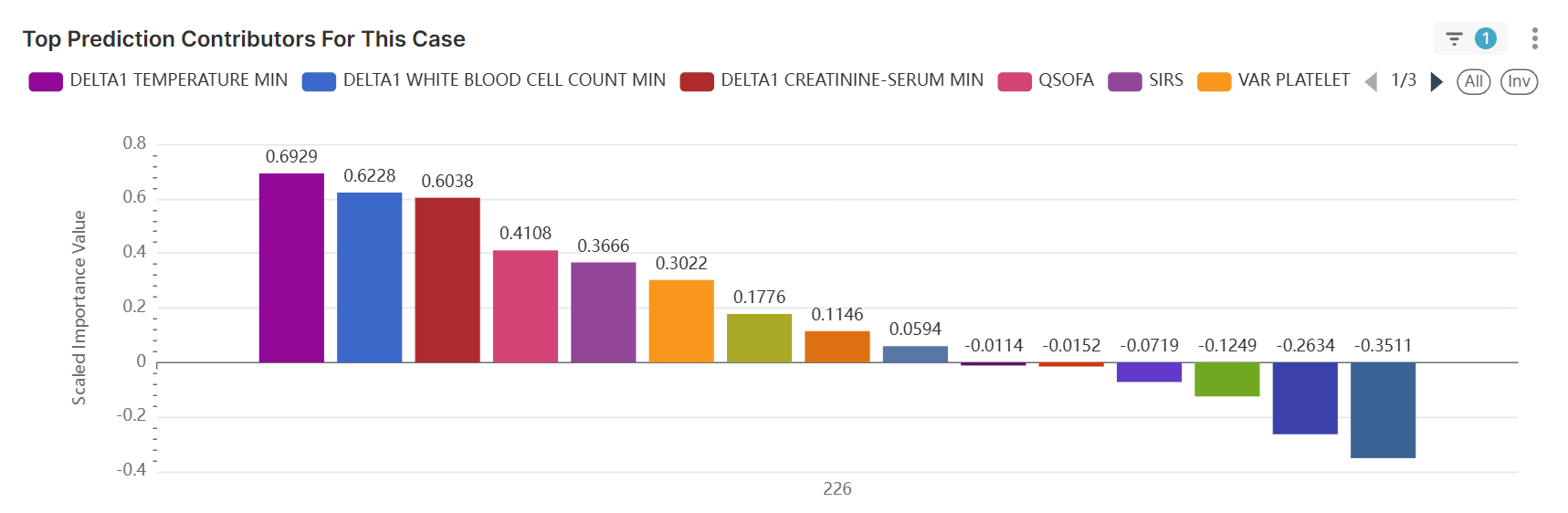

Drill down into AI model performance at granular levels to gain deeper insights into the factors that drive AI decisions, helping clinicians and healthcare teams understand how the model works and why it makes specific predictions.

Demographic and Bias-Free Analysis

Break down model performance across key demographics, socio-graphics, and other factors to ensure that no inherent bias towards race, gender, or economic status exists in your model’s predictions.
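To make the idea of a demographic breakdown concrete, here is a minimal sketch that computes sensitivity separately for each group and reports the largest gap between groups. The record schema (`group`, `predicted`, `actual`), the choice of sensitivity as the metric, and the data are assumptions for illustration only, not the platform's actual method.

```python
from collections import defaultdict


def sensitivity_by_group(records, group_key):
    """Share of true events the model caught, computed per demographic group."""
    hits = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        if record["actual"] == 1:
            positives[record[group_key]] += 1
            if record["predicted"] == 1:
                hits[record[group_key]] += 1
    return {group: hits[group] / positives[group] for group in positives}


def largest_gap(rates):
    """Difference between the best- and worst-served groups (0.0 if no groups)."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical records; "A" and "B" stand in for any demographic attribute.
records = [
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 0, "actual": 1},
    {"group": "A", "predicted": 0, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
    {"group": "B", "predicted": 1, "actual": 0},
]
rates = sensitivity_by_group(records, "group")
gap = largest_gap(rates)
print(rates, gap)
```

A large gap does not prove bias on its own, but it flags where a closer review of the model and its training data may be warranted.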

Want to see how ExplainerAI™ delivers AI transparency?

Promote AI Governance, Compliance, and Ethics

Eliminate AI Bias and Promote Fairness

ExplainerAI™ ensures AI fairness by identifying and mitigating AI bias in model predictions. The platform analyzes model performance across diverse demographic groups—such as race, gender, and socio-economic status—to ensure fairness and prevent unintended consequences.

Meet AI Compliance and Regulatory Standards

With built-in compliance tracking, ExplainerAI™ helps healthcare organizations demonstrate that their AI models meet the highest standards for regulatory compliance, such as HIPAA, and are aligned with leading risk management frameworks and FDA regulations. We provide clear, actionable insights into the model’s decision-making process to support regulatory audits and certifications.

Ensure the Responsible Use of AI

ExplainerAI™ is built with AI ethics in mind. By addressing the ethical implications of AI decision-making, our platform fosters responsible AI adoption. We help organizations prevent AI black box problems by making models explainable and interpretable. ExplainerAI™ ensures that decisions made by your AI are transparent and aligned with ethical guidelines.

Support Clinician Adoption of AI Models

Provide a Clinician-Friendly Interface

ExplainerAI™ is designed with clinicians in mind. Our interface prioritizes ease of use with intuitive dashboards and visualizations that provide clear, interpretable insights. This helps healthcare professionals understand how AI models make recommendations and motivates them to include AI as a valuable tool in their everyday clinical workflows.

Build Clinician Trust in AI

Building clinician trust in AI models is essential for widespread adoption. ExplainerAI™ fosters trust by offering clear, understandable explanations of how predictions are made and which factors influence those predictions. This transparency supports the seamless integration of AI technologies into healthcare teams, empowering clinicians to leverage AI as a trusted partner in improving patient outcomes.

Integrate AI Seamlessly into Epic and other Healthcare Systems

Easy AI Integration

ExplainerAI™ integrates seamlessly with existing healthcare IT systems, including Electronic Health Records (EHRs), Epic, clinical decision support tools, and other healthcare platforms. It ensures that AI integration does not disrupt existing workflows but instead enhances operational efficiency and decision-making capabilities. AI-driven insights are directly accessible within Epic and other EHR dashboards, providing clinicians with actionable recommendations fully integrated with their day-to-day tasks.

Works with Any AI Model

Whether you use a custom-built model or a third-party solution, ExplainerAI™ works with any AI model to provide transparency and real-time performance insights. This flexibility makes it easy to manage and optimize AI-driven healthcare solutions without starting from scratch.

Enhances AI Adoption within Epic

Epic-centric organizations are increasingly leveraging AI models for advanced predictive analytics. With ExplainerAI™, organizations can view and manage AI decision-making processes from within Epic, ensuring every AI recommendation is explainable and interpretable. This promotes AI transparency, helps clinicians trust the model-generated insights, and encourages widespread AI adoption within Epic environments.

Built to Open Standards

Built to foster adoption through trust, ExplainerAI™ is designed on the four pillars of the NIH’s AI Governance Framework: trustworthiness, fairness, transparency, and accountability.

Extendable to Business Intelligence Tools

ExplainerAI™ can be extended to leading BI tools like Power BI, Tableau, and others for advanced reporting and visualization. This feature ensures deeper insights into AI performance, helping stakeholders monitor outcomes and make data-driven decisions.

Keeps Everything in One Place

See how all of your models perform in one place with one user interface. Don't force your staff to look for model performance in a mosaic of different locations and interfaces.

Want to see how ExplainerAI™ integrates with your EHR system?

Advance Responsible AI in Healthcare

Full AI Model Transparency

Gain a comprehensive view of how AI models operate. ExplainerAI™ details which parameters influence predictions and the magnitude of their effect, providing clarity for healthcare teams.
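One simple way to picture "which parameters influence a prediction and by how much" is a linear (logistic) risk model, where each input's contribution to the log-odds is its coefficient times its deviation from a reference patient. The sketch below uses that approach purely as an illustration; the source does not say which attribution technique ExplainerAI™ uses, and the coefficients, feature names, and values here are hypothetical.

```python
def log_odds(intercept, coefficients, values):
    """Log-odds of the event under a logistic model."""
    return intercept + sum(coefficients[name] * values[name] for name in coefficients)


def contributions(coefficients, patient, baseline):
    """Per-feature shift in log-odds relative to a reference (baseline) patient."""
    return {
        name: coefficients[name] * (patient[name] - baseline[name])
        for name in coefficients
    }


def ranked(contribs):
    """Order features by the magnitude of their effect, largest first."""
    return sorted(contribs.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical model and patients (not real clinical coefficients).
intercept = -3.0
coefficients = {"age": 0.04, "prior_admissions": 0.5, "on_anticoagulant": -0.3}
baseline = {"age": 60, "prior_admissions": 1, "on_anticoagulant": 0}
patient = {"age": 70, "prior_admissions": 3, "on_anticoagulant": 1}

contribs = contributions(coefficients, patient, baseline)
for name, effect in ranked(contribs):
    direction = "raises" if effect > 0 else "lowers"
    print(f"{name}: {direction} log-odds by {abs(effect):.2f}")
```

For a linear model these contributions add up exactly to the difference in log-odds between the patient and the baseline; non-linear models generally need other attribution methods.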

Predictive Accuracy Insights

Understand how AI models impact patient outcomes by revealing key insights into model behavior and prediction reliability, making it easier to evaluate the effectiveness of AI-driven decisions.

Model Drift Monitoring

See model drift as it happens and re-train your model so that it continues to perform at the top of its game.

Governance and Compliance Assurance

Stay ahead of regulatory requirements by demonstrating how AI models meet the necessary standards for FDA and HIPAA compliance. With ExplainerAI™, organizations can be confident in the model’s decision-making process.

Typical Use Cases

AI and ML Model Optimization

ExplainerAI™ allows you to connect any number of AI or ML models into the platform to manage and optimize performance across your portfolio. Real-time performance analytics and unique governance capabilities prevent model drift and mitigate hallucinations, resulting in more intelligent clinical decision-making and better patient care. You can read more about an ExplainerAI™ use case at Montefiore Hospital.

Clinician Trust

ExplainerAI™ provides clear insights into how AI models arrive at their predictions, helping clinicians trust AI tools and adopt them confidently in clinical practice.

Governance and Risk Mitigation

AI governance is critical for compliance with healthcare regulations. ExplainerAI™ ensures predictive models comply with HIPAA, FDA requirements, and other standards, mitigating compliance risks and avoiding costly penalties.

Want to see ExplainerAI™ with your use case?

Frequently Asked Questions (FAQs)

AI Governance, Ethics, and Transparency

What is Explainable AI (XAI)?

Explainable AI (XAI) refers to AI models and systems designed to be transparent and interpretable, making their decision-making processes understandable to humans. By providing clear insights into how predictive AI models generate recommendations, XAI mitigates concerns about the AI black box problem and ensures AI fairness. It also promotes AI ethics, as it allows organizations to understand and address any unintended AI bias in the system.

What is AI transparency, and why is it crucial in healthcare?

AI transparency ensures that healthcare professionals understand how AI models make decisions, providing clarity on which factors influence predictions. This is essential for building trust with both clinicians and patients, ensuring AI compliance with HIPAA and FDA requirements, and preventing issues like AI bias and AI black box problems. By offering transparency, healthcare organizations can enhance the reliability of predictive AI models and improve patient outcomes.

Why is responsible AI in healthcare important and how does ExplainerAI™ support it?

ExplainerAI™ fosters responsible AI by ensuring that AI models are fair, transparent, and ethical. By offering AI transparency and AI interpretability, ExplainerAI™ helps prevent AI bias and promotes AI fairness. It also ensures that AI models align with AI governance frameworks, supporting AI compliance and responsible AI practices that enhance patient care and decision-making.

How does ExplainerAI™ help improve AI governance and ensure compliance?

ExplainerAI™ enhances AI governance by offering tools for monitoring, auditing, and evaluating AI models. By ensuring that AI models comply with AI governance frameworks and with regulatory requirements such as those of HIPAA and the FDA, ExplainerAI™ helps organizations maintain ethical standards and regulatory compliance. It provides clear visibility into the model’s decision-making process, supporting transparency and accountability in healthcare settings.

What role does AI ethics play in healthcare, and how does ExplainerAI™ address it?

AI ethics ensures that AI models make fair, transparent, and accountable decisions. ExplainerAI™ addresses AI ethics by providing AI transparency and AI interpretability, helping healthcare organizations avoid discriminatory practices like AI bias. By promoting AI fairness, AI governance, and responsible AI, ExplainerAI™ ensures that AI models align with both ethical guidelines and regulatory standards.

How does ExplainerAI™ help reduce AI bias in healthcare?

ExplainerAI™ reduces AI bias by breaking down AI model performance across key demographic and socio-economic factors. This ensures that AI models are fair and make equitable predictions across diverse patient populations. By promoting AI fairness and providing full AI transparency, ExplainerAI™ ensures that healthcare decisions made by AI models are free from bias and align with ethical standards.

What is the AI black box problem and how does ExplainerAI™ solve it?

The AI black box problem occurs when the decision-making process of AI models is opaque, making it difficult for clinicians to understand how predictions are made. ExplainerAI™ solves this by providing explainable AI, offering clear insights into how AI models operate and which factors influence predictions. This promotes AI transparency, builds trust among clinicians, and enhances the adoption of AI in healthcare.

How does ExplainerAI™ ensure AI performance and prevent AI model drift?

ExplainerAI™ continuously monitors AI model performance to detect and prevent AI drift—a decrease in model accuracy over time. By tracking changes in model behavior and updating models as needed, ExplainerAI™ ensures that predictive AI models deliver reliable, consistent results. This ongoing AI evaluation is crucial for maintaining model performance and ensuring that healthcare decisions remain accurate and aligned with clinical objectives.
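As a minimal sketch of drift detection in the sense described above (accuracy falling over time), the example below scores consecutive batches of cases and flags any batch whose accuracy drops too far below a baseline. The window size, baseline, tolerance, and data are assumptions chosen for illustration, and the code covers detection only; it does not represent ExplainerAI™'s actual monitoring logic.

```python
def windowed_accuracy(predicted, actual, window):
    """Accuracy for each consecutive, non-overlapping window of `window` cases."""
    scores = []
    for start in range(0, len(predicted) - window + 1, window):
        batch_pred = predicted[start:start + window]
        batch_actual = actual[start:start + window]
        correct = sum(1 for p, a in zip(batch_pred, batch_actual) if p == a)
        scores.append(correct / window)
    return scores


def drift_alerts(scores, baseline, tolerance):
    """Indexes of windows whose accuracy fell more than `tolerance` below baseline."""
    return [i for i, score in enumerate(scores) if baseline - score > tolerance]


# Hypothetical stream of cases, oldest first.
actual = [1, 0, 1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 0, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0]

scores = windowed_accuracy(predicted, actual, window=4)
alerts = drift_alerts(scores, baseline=0.9, tolerance=0.2)
print(scores, alerts)
```

An alert like this would typically prompt a review of recent data and, if needed, retraining or recalibration of the model.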

ExplainerAI™ Integration, Setup and Configuration

How does ExplainerAI™ integrate with existing healthcare systems?

ExplainerAI™ is designed for seamless AI integration with existing healthcare IT systems like EHRs, Epic, clinical decision support tools, and other platforms. This integration ensures minimal disruption to workflows, allowing healthcare organizations to enhance their systems with predictive AI models and AI dashboards while maintaining full transparency and AI compliance.

Does ExplainerAI™ integrate with Epic EHR systems?

Yes, ExplainerAI™ integrates seamlessly with Epic EHR systems, allowing healthcare organizations to easily adopt predictive AI models within their existing workflows. By connecting with Epic’s clinical environment, ExplainerAI™ provides AI transparency and actionable insights directly within the Epic dashboard. This integration ensures that clinicians can access clear, interpretable AI-driven recommendations without disrupting their daily tasks, promoting AI adoption across healthcare teams.

Can ExplainerAI™ work with both Epic's native AI tools and custom AI models?

Yes, ExplainerAI™ is highly flexible and can integrate with both Epic's native AI tools and custom AI models developed in-house or by third-party vendors. This enables Epic-centric organizations to enhance their AI models with full transparency, ensuring that all AI-driven insights are explainable and interpretable, regardless of the model’s origin.

What data feeds are required to set up ExplainerAI™?

To set up real-time predictive models, ExplainerAI™ needs access to an HL7 feed. HL7 is the standard for exchanging healthcare information between systems and allows ExplainerAI™ to pull the necessary data for real-time analysis. This flexibility ensures that ExplainerAI™ can work with your existing data infrastructure without requiring major changes to your current setup.
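For readers unfamiliar with HL7, the sketch below shows what reading a single message from such a feed might look like, assuming the feed carries HL7 v2 pipe-delimited messages (the source does not specify the HL7 version). The sample message, application names, and helper functions are made up for this example; a production feed would also need escape-sequence handling, repeating fields, and a transport layer, which a dedicated HL7 library usually provides.

```python
def parse_segments(message):
    """Group the fields of each segment by segment name (MSH, PID, OBX, ...)."""
    segments = {}
    for line in message.strip().split("\r"):  # HL7 v2 segments end with carriage returns
        fields = line.split("|")
        segments.setdefault(fields[0], []).append(fields)
    return segments


def observations(message):
    """Extract code, name, value, and units from each OBX (observation) segment."""
    results = []
    for obx in parse_segments(message).get("OBX", []):
        identifier = (obx[3].split("^") + [""])[:2]  # OBX-3: code^name^coding system
        value = float(obx[5]) if obx[2] == "NM" else obx[5]  # OBX-2 "NM" = numeric
        results.append(
            {"code": identifier[0], "name": identifier[1], "value": value, "units": obx[6]}
        )
    return results


# Hypothetical lab-result message; all identifiers are invented.
SAMPLE = "\r".join([
    r"MSH|^~\&|SENDING_APP|SENDING_FAC|RECEIVING_APP|RECEIVING_FAC|20240101120000||ORU^R01|MSG00001|P|2.5",
    "PID|1||123456^^^HOSP^MR||DOE^JANE||19800101|F",
    "OBX|1|NM|2345-7^Glucose^LN||105|mg/dL|70-99|H|||F",
])

obs = observations(SAMPLE)
message_type = parse_segments(SAMPLE)["MSH"][0][8]  # MSH-9; MSH-1 is the "|" itself
print(message_type, obs)
```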

How long does it typically take to integrate ExplainerAI™?

The time required for integration depends on the complexity of your existing system. However, assuming the model is already implemented and data points are available, integration with ExplainerAI™ typically takes about one workday. This quick setup ensures minimal disruption to your operations and allows your team to start benefiting from AI transparency and real-time monitoring right away. Our team works closely with you to ensure a smooth integration process.

Is ExplainerAI™ hosted on Cognome Cloud or on the customer’s network?

ExplainerAI™ can be hosted in either environment, and both options provide the same level of performance, security, and transparency. Hosting on Cognome Cloud offers the benefits of scalability and easy access to real-time insights. Hosting on-premise within your organization’s network keeps data and systems internal. This flexibility ensures that ExplainerAI™ can be tailored to your organization's needs, whether you prioritize cloud-based convenience or the control offered by on-premise installations.

ExplainerAI™ Usage and Benefits

Is ExplainerAI™ easy to use for clinicians and healthcare professionals?

Yes, ExplainerAI™ is designed with clinicians in mind. The platform features intuitive dashboards and clear visualizations, enabling healthcare professionals to easily interpret AI-driven recommendations and feel confident in their decision-making.

What are the key benefits of using ExplainerAI™ in healthcare?

ExplainerAI™ offers several key benefits for healthcare organizations. It enhances AI transparency by providing a clear view of the decision-making process, from data inputs to final predictions. This transparency enables clinicians to confidently leverage AI insights, improving patient outcomes.

By removing opacity, ExplainerAI™ also builds trust among healthcare teams, facilitating broader AI adoption across organizations.

Finally, ExplainerAI™’s streamlined AI integration reduces training time and simplifies the management of AI model compliance, saving time and resources.

If my model is already integrated into my workflow, what’s the value of ExplainerAI™?

Even if your AI model is already integrated into your workflow, ExplainerAI™ provides additional value by offering real-time monitoring of the model’s performance. It allows you to better understand how each input contributes to the model’s output, helping healthcare teams make more informed decisions. Additionally, ExplainerAI™ provides insights into AI bias, allowing organizations to evaluate how models may be influenced, and ensuring that AI fairness is maintained. By providing AI transparency and deeper insights into model behavior, ExplainerAI™ enhances the effectiveness and trustworthiness of existing AI systems.

How does Explainable AI benefit healthcare professionals and patients?

Explainable AI provides clear, understandable insights into how AI models arrive at their predictions. This transparency helps clinicians trust AI-driven recommendations, which is vital for AI adoption in healthcare. It also supports patient trust by making the decision-making process more accessible, ensuring that healthcare providers make informed, fair, and ethical decisions. This reduces resistance to AI integration and fosters responsible AI practices.

How does ExplainerAI™ ensure patient trust in AI-powered healthcare?

Patient trust in AI models is critical for their successful integration into healthcare. ExplainerAI™ ensures patient trust by providing full AI transparency and clear explanations of how AI-driven predictions are made. This transparency allows patients to understand the basis of decisions impacting their care, ensuring that AI models are ethical, fair, and aligned with clinical best practices, leading to more confident engagement in AI-assisted healthcare.

Still Have Questions?

Use the "Contact Us" form below to request a demo and discuss your specific needs. We will walk you through the platform’s features and show how ExplainerAI™ can help you improve AI governance, compliance, and patient outcomes.